- Insights by Aiverse


Consumer AI: Part 2 of the Ultimate Race

A look into what companies are actually building to win the consumer wearable AI space.

Hello from the beyond! This is Voyager, the only designers-aboard spaceflight navigating you through the AI-UX universe.

We're the Find My iPhone to your AirPods. We help you when you get lost in the AI universe.

We’re growing - welcome new subs!

This is part 2 of a juicy piece, and just before biting into it, in 10 secs, here’s what I’m writing about today —

🥩 The race to win Consumer Wearable AI continues (part 2)

What they’re actually brewing — The Game-changer move, with all its AI-UX interactions

🔥 Hot & spicy AI-UX: AI in a smartphone

🤔 Making sense of the future AI-UX promise

👿 The other side of the AI promise

🤣 Memes of the day

There are 4 AI innovation trends that are taking place under our noses —

Dynamic Interfaces

Ephemeral Interfaces

aiOS → wrote about it last time

Screenless UX → today’s post

The Race to Win Consumer Wearable AI (Part 2) —

The ULTIMATE deep dive into AI wearables, your daily-wear swag. It’s a gold rush. People are racing to build THE wearable, and there can only be a few winners, given that there are only so many things you can wear at a time.

We’re diving into —

Pin: Humane

Pocket Device: Rabbit

Pendant: Tab, Rewind’s Limitless

(New) iPhone: [a secret trio product], ReALM

Glasses: Meta’s Ray Bans, Brilliant’s Frame

Real Tony Stark stuff.

What’s actually brewing under…

1-3. Humane, Rabbit, Tab

We talked about these products in our last newsletter.

Update on Tab —

They’re now called Friend. The mission is still unknown, but the new design? Wow!

This is Part 2: Rewind’s Limitless, the secret trio product, ReALM, Meta’s Ray Bans and Brilliant’s Frame.

4. Rewind’s Limitless

The pendant — source: Limitless

Overview

An audio-only input pendant.

Restricted to a specific environment: the workplace.

Its mission: help you become more productive at work.

The Game-changer move

I think constraining the environment could be the winning strategy.

When voice assistants were on the rise, Siri lost badly. You could talk to it anywhere, anytime, but because of its limited speech recognition, most of the time you just got “Sorry, I didn’t quite get that.”

People have voice assistant PTSD thanks to Siri.

Then Amazon launched the Echo, confined to your home (initially, in fact, to the kitchen), where you had specific use cases like “set a timer.” It won the voice assistant space.

Similarly, instead of recording everything everywhere (like Tab), Limitless restricts recording to the workplace and meetings, which makes it less of a privacy threat and more of a productivity tool.

Positioning makes a lot of difference.

Having a wiretap on you at all times can be scary and invasive, but what if it were limited to your company meetings?

AI features: Audio transcriptions, meeting summaries, notes.

AI-UX Interactions

Voice input to listen to everything.

TBD (not yet launched)

5. [Secret trio product]

Powerpuff Girls, Silicon Valley edition - source: author

Overview

Apparently, Sam Altman wants to build the “iPhone for AI”.

Nothing more, nada, no public information. It might not even be real.

So why did I mention it? Just tech-gossip :)

The Game-changer move

The people involved.

#1 AI-entrepreneur (Sam, do I need to say who?).

#1 Designer (Jony Ive, Apple’s OG designer).

#1 Investor (Masayoshi Son, SoftBank).

Take my money already!

AI-UX Interactions

Nothing exists yet, but I’m already simping.

Another piece of hot gossip: Sam Altman said “we probably won't need a new device.” The kind of app he envisions for the future is an AI agent that lives in the cloud. He describes it as a “super-competent colleague that knows absolutely everything about my whole life, every email, every conversation I've ever had, but doesn't feel like an extension.”

But that’s really a question of where the computation happens. Consumer products could still exist to provide a screen-free experience; these new devices would then be a way to access the cloud.

More on this towards the end of the newsletter.

6. Apple’s ReALM

Tim’s cooking — source: author

Overview

It’s like Siri, but it can also see and understand everything on your screen.

Apple published it as a research paper, so there are no demos… yet (but wait 5 weeks, WWDC is just around the corner 🤞)

The Game-changer move

The technical approach.

It’s a tiny model that provides “what’s on my screen?” context to the other AI models, like Siri.

It creates a text-based representation of the visual layout, labelling everything on the screen and its location.

It uses an efficient text-only architecture rather than a multimodal model.

Thanks to this approach, Apple reports that its smallest model roughly matches GPT-4 at “reference resolution,” and its larger models beat it.

Reference resolution means figuring out what ambiguous phrases like “they,” “that,” “the bottom one” or “the number on screen” actually refer to.

Sample Interactions between a user and an agent - source Medium
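To make the “text-based representation of the screen” idea a bit more concrete, here’s a minimal Python sketch. It is not Apple’s code: it assumes each on-screen element has already been detected with a type label and the center of its bounding box, and `ScreenEntity`, `screen_to_text` and the 10-pixel line margin are hypothetical choices for illustration. Elements are sorted top to bottom, elements at roughly the same height share a line, and each line reads left to right, so a text-only model could work out “the bottom one” from plain text.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """A hypothetical on-screen element: a type label, its text and its position."""
    kind: str   # e.g. "phone", "address", "text"
    text: str
    x: float    # horizontal center of the bounding box, in pixels
    y: float    # vertical center of the bounding box, in pixels


def screen_to_text(entities: list[ScreenEntity], line_margin: float = 10.0) -> str:
    """Flatten a screen into labelled text, top to bottom and left to right.

    Entities whose vertical centers are within `line_margin` pixels of the
    first entity on the current line go on that line, tab-separated.
    """
    ordered = sorted(entities, key=lambda e: (e.y, e.x))
    lines: list[list[ScreenEntity]] = []
    for entity in ordered:
        if lines and abs(entity.y - lines[-1][0].y) <= line_margin:
            lines[-1].append(entity)   # roughly the same height: same line
        else:
            lines.append([entity])     # noticeably lower: start a new line
    rendered = []
    for line in lines:
        line.sort(key=lambda e: e.x)   # left to right within a line
        rendered.append("\t".join(f"[{e.kind}] {e.text}" for e in line))
    return "\n".join(rendered)


screen = [
    ScreenEntity("text", "Open 9am-5pm", x=80, y=70),
    ScreenEntity("phone", "555-0100", x=200, y=24),
    ScreenEntity("text", "Call us", x=60, y=20),
]
print(screen_to_text(screen))
```

The result is the kind of plain-text “screen” a small language model could read alongside a request like “call the number on screen.”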

AI-UX Interactions

No direct user interactions; it just exists in the matrix. It’s like spyware you can talk to naturally. Siri on steroids, soon.

7. Meta Ray Bans

Meta Ray Bans worn by the one and only — source: Telegraph

Overview

From Clark Kent to Superman, the magic of Meta’s Ray Bans.

It’s a camera and AI in a frame.

It’s got style too, unlike the Oculus headsets, which block half your face.

The Game-changer move

Linking the glasses with their social apps.

Meta has always focused on changing the way people communicate.

Seen from that POV, the glasses aren’t just another AI wearable. They’re not just ChatGPT in a box; I’m sure there’s more to it.

The current features, video-calling and sharing your POV directly to Instagram, are already augmenting how we communicate. It feels more authentic: it’s about sharing what I’m seeing, LIVE.

Do I think Meta’s glasses are an intermediate step between smartphones and the metaverse? Yes.

AI-UX Interactions

I’ve tried out the current version and they are gooood!

You can listen to music without earphones (thanks to the close-to-ear speakers), and without disturbing your desk neighbours.

The camera quality is impressive: better video than the Samsung phone I’ve been using for 3 years.

“Hey Meta” voice command to capture photos & videos.

Coming soon: voice commands to analyze what you’re looking at.

8. Brilliant Labs’ Frame

Frame demo (video)

Overview

Daily-wear glasses with a screen, camera and AI.

It’s multimodal and open source.

The Game-changer move

It uses OpenAI for visual analysis, Whisper for listening and translation, and Perplexity for searching the web.

But they went one step further: they let developers build for the glasses.

They have their own AR Studio extension, which lets you control the glasses’ hardware (camera, microphone, display, etc.) and build your own app.

Kind of like the current app store, but for glasses. The possibilities are endless.

Imagine stargazing without having to hold your phone up.

Imagine being able to shop by just “looking” at the barcodes.

Imagine being able to create a mood-board by capturing images all around the world.

All of this is already possible with your phone; now it’s possible without it, too.

If Frame is the Android of glasses, Meta’s Ray Bans feel like the iOS.

AI-UX Interactions

A display that shows text and vector graphics in the glasses themselves.

Microphone to listen to any voice commands or other people (to translate live speech)

Motion-sensor gestures: tap, pitch (up-down angle), roll (tilting your head left-right) and heading (the direction you’re facing).

Motion sensor gestures for Frame — source:
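For the curious, here’s roughly how pitch, roll, heading and tap can be derived from raw motion-sensor readings. This is a minimal Python sketch using standard tilt formulas, not Frame’s SDK: the function names, the axis convention (a level device reads (0, 0, 1) g) and the 2 g tap threshold are all assumptions. Pitch and roll are only meaningful while the head is fairly still, so the accelerometer is mostly measuring gravity.

```python
import math


def tilt_angles(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Return (pitch, roll) in degrees from an accelerometer reading in g.

    Assumes the device is roughly still (the reading is mostly gravity)
    and an axis convention where a level device reads (0, 0, 1).
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))  # nodding up/down
    roll = math.degrees(math.atan2(ay, az))                    # tilting left/right
    return pitch, roll


def heading(mx: float, my: float) -> float:
    """Compass heading in degrees [0, 360) from magnetometer x/y.

    Only valid while the device is level (no tilt compensation).
    """
    return math.degrees(math.atan2(my, mx)) % 360.0


def is_tap(magnitudes: list[float], threshold_g: float = 2.0) -> bool:
    """Treat any short acceleration spike above `threshold_g` as a tap."""
    return any(m > threshold_g for m in magnitudes)


pitch, roll = tilt_angles(0.5, 0.0, 0.866)   # head tilted forward
print(round(pitch), round(roll))
print(heading(0.0, -1.0))
print(is_tap([1.0, 1.1, 2.6, 1.0]))
```

Real firmware would also filter sensor noise and debounce taps; this only shows which sensor reading maps to which gesture.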

That’s it. Those are the top consumer AI products and their game-changing moves. Thank you for reading this one!

Instead of just announcing newly released AI-UX interactions, I thought I’d make this section more meaningful by highlighting the trends and hot interactions.

🔥 Hot & spicy AI-UX: AI in a smartphone

Have you seen the Samsung S24? I just got access to it and… IT’S GOOD!

Fluid AI integration hits the spot 🤤

You can circle to search anything on the screen

You can edit the text from within the keyboard.

You can edit photos seamlessly — time to remove your ex from photos?

Circle to search - source: aiverse

Translation in keyboard - source: aiverse

Editing photos with AI - source: aiverse

It’s getting there, but Samsung’s AI still feels like an add-on “magic button.”

Quick note - You can find all the high-quality Samsung S24 Ultra interactions now live on aiverse.

With AI, we’re living in a world of promises; that’s super clear.

🤔 Making sense of the future AI promise

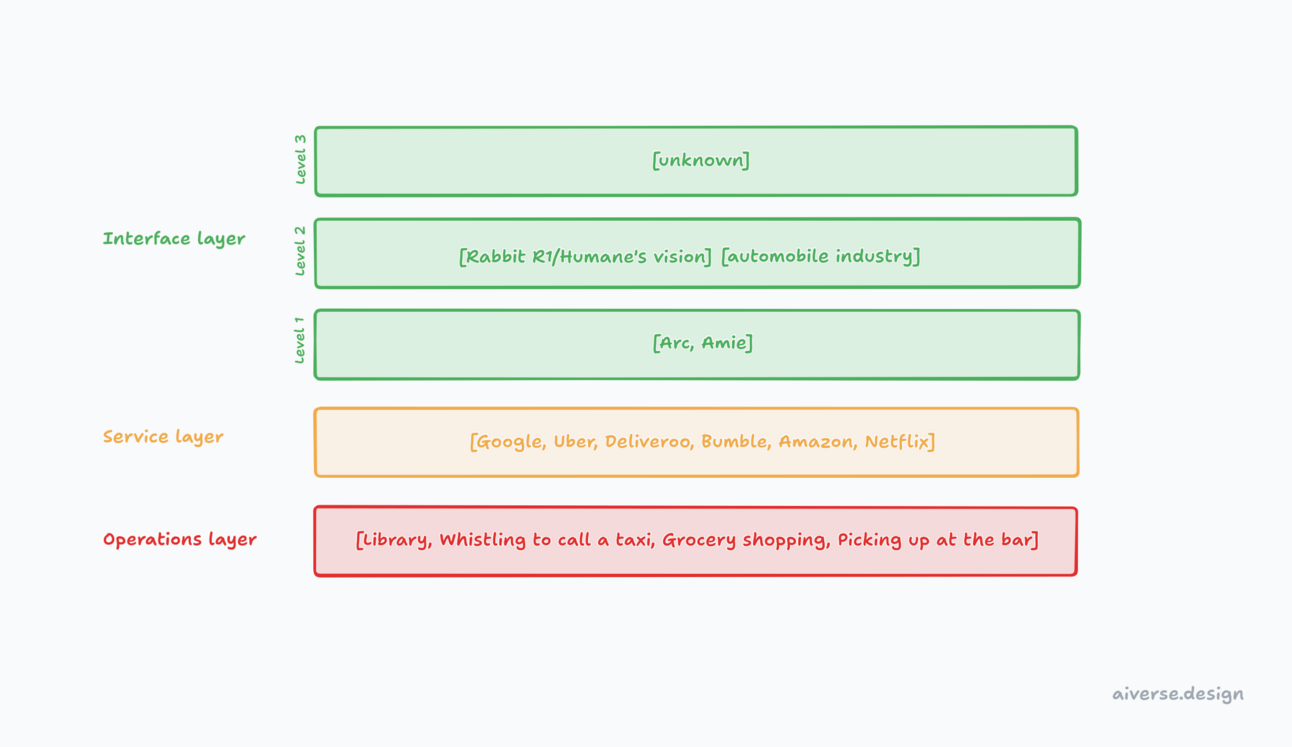

We talked about the future promise of AI, “Screenless UX” or the “Interface layer,” in Part 1 of Consumer AI. Here’s a quick catch-up:

Operations layer - the on-ground services

Service layer - commoditized the operations layer by giving you sit-at-home apps to order these services, like Uber & Deliveroo

Interface layer - will commoditize service layer by integrating service apps together to provide one experience/service.

Example - Pinterest is a software version of an IKEA showroom. Why would users browse Amazon when they can “envision” their dream house and order things accordingly, right in that interface?

A small use case but the future is grander.
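One way to picture this layering in code: a minimal Python sketch in which a hypothetical interface layer takes a single request, fans it out to several service-layer apps, and hands back one merged answer. `InterfaceLayer`, `CatalogService`, the store names and the prices are all made up for illustration; real services would sit behind their own APIs.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    service: str   # which service-layer app the offer came from
    item: str
    price: float


@dataclass
class CatalogService:
    """Toy stand-in for a service-layer app (a store, a delivery app...)."""
    name: str
    catalog: dict[str, float]

    def search(self, query: str) -> list[Offer]:
        return [
            Offer(self.name, item, price)
            for item, price in self.catalog.items()
            if query.lower() in item.lower()
        ]


class InterfaceLayer:
    """Hypothetical interface layer: one request in, one merged answer out."""

    def __init__(self, services: list[CatalogService]) -> None:
        self.services = services

    def fulfil(self, query: str) -> list[Offer]:
        # Ask every service, then merge the results into one ranked list,
        # so the user never has to open the individual apps.
        offers = [offer for s in self.services for offer in s.search(query)]
        return sorted(offers, key=lambda o: o.price)


layer = InterfaceLayer([
    CatalogService("StoreA", {"Oak desk": 120.0, "Desk lamp": 25.0}),
    CatalogService("StoreB", {"Walnut desk": 95.0, "Sofa": 400.0}),
])
for offer in layer.fulfil("desk"):
    print(offer)
```

Ranking by price is just a placeholder; the point is that the user talks to one layer instead of opening each service app.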

Screenless UX is a subset of the interface layer. Or it could be the same thing; it depends on whether screens still exist in the long-term future.

Some more thoughts

I tried to come up with examples of how things are evolving right now —

We used to go to the library to research (unless you were born in the Instagram era, in which case you probably browse TikTok and don’t know what a library is: a physical place with books and a ‘Shhhhhhh’ sign).

Now we Google and search the world wide web.

Arc is a Level 1 interface-layer app: you don’t have to “search” yourself; you just say what you’re looking for, and the app compiles the best, most relevant results into an answer.

Arc search in action — source: Arc

Another example —

Back in the 90s, people used their rizz (the gen-z term for…umm… charisma) to pick up girls at the bar. Or they met someone at a house party and awed them with their anecdotes.

We now have Tinder/Bumble/Hinge and the love-of-my-life is just one swipe away.

The future? Imagine a world where there’s a cloned version of you as a GPT that knows your interests, attachment style, what you’re going through right now, your hobbies etc. and you get a pre-filtered list of potential people that you can swipe on (because we all know you’d still want to “check them out”).

Swipe right, Match! A date is set, synced with both your calendars. You can now meet and vibe the old-fashioned style (or suffer through an awkward date when you shake her hand…as she was going for the bread)

Level 1 of the interface layer is happening right now. Level 2 is a vision, with some great examples in the automobile industry; I think it would make a great source of inspiration, and it’s a breakdown I’m excited to research. Level 3 is unknown.

The biggest question now is, who’s going to win the Interface layer?

And second, how do you design for the interface layer?

👿 The other side of the AI promise

But like all stories and beliefs, there are 2 sides. What if, at the end of the day, these AI wearables and gadgets are just toys?

Google I/O is in 1 week. WWDC is in 5 weeks.

Maybe these companies are just rushing to get some eyes and $$$.

As Merci Grace put it, this landscape shift feels “less like mobile apps in 2008 and more like the rise of the cloud.”

And honestly, that’s true. Humane, for example, is hardware-first, but all its AI functionality runs in the cloud. Every request goes to the cloud, which is the main reason you’re left waiting awkwardly in silence.

Even Sam Altman believes the cloud is where this will live.

But that’s just the backend, the place where the computation happens. It’s a ‘cloud vs. local’ fight, not really ‘new device vs. no new device’.

All the new devices can still be the “time-to-ring-the-Cloud-god” device, providing a screen-free experience.

I believe the cloud theory applies mainly to prosumer and enterprise apps. The new devices aren’t there to replace the existing ones (those are just too powerful and flexible), but for day-to-day use cases and non-tech consumers, the new devices (or at least their vision) might suffice.

Maybe the new devices aren’t for tech nerds like us; maybe they’re just aimed at the wrong user group. What if blind people used the multimodal features in their glasses? What if the Rabbit R1 were a toy for children to learn and explore their curiosity?

In fact, I predict that AI will be mobile-first and run locally. It just seems obvious: chips are getting more powerful, and the cloud is getting expensive.

The models will run locally, in a mobile-first “aiOS.” That solves the privacy problem too.

🤣 Memes of the day

original meme from the crypto land via Milkroad

- a designer in the aiverse a.k.a mr. keeping the succulent alive

P.S. Wait, wait, time for some quick feedback? What did you think of this piece? Reply to this email and let me know: did you learn something new?

P.P.S. — As always, don’t forget to invite your other designer friends onboard the Voyager - we have a few empty window seats on this spaceship 😉
